Thomas' Calculus and Linear Algebra and Its Applications Package for the Georgia Institute of Technology, 1/e

5th Edition

ISBN: 9781323132098

Author: Thomas, Lay

Publisher: PEARSON C

Textbook Question

Chapter 10.3, Problem 25E

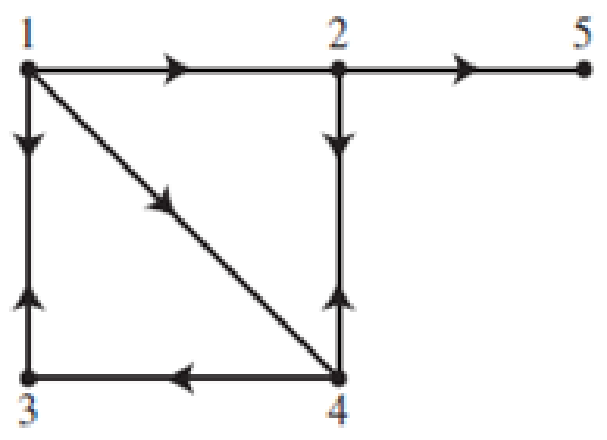

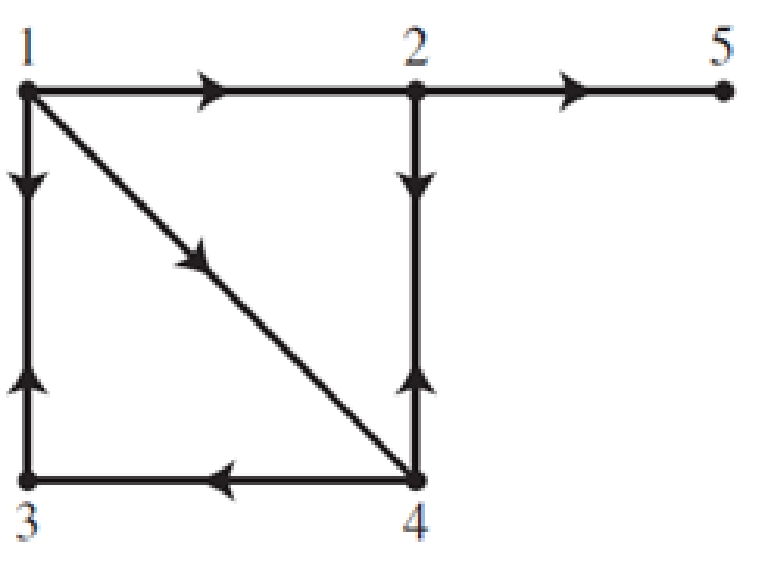

The following set of webpages hyperlinked by the directed graph was studied in Section 10.2, Exercise 25.

Consider randomly surfing on this set of webpages using the Google matrix as the transition matrix.
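Random surfing can be sketched directly as a simulation. On each click, the surfer follows a random outgoing link of the current page with probability p. Otherwise, with probability 1 - p, the surfer jumps to a page chosen uniformly at random. This is the behavior the Google matrix encodes. The graph `LINKS` below is a hypothetical 5-page example, not the exercise's graph, which appears only in the image. Three further points are assumptions here: the damping value p = 0.85, the rule that a page with no outgoing links sends the surfer to a uniformly random page, and the helper name `surf`. The output is a Monte Carlo estimate of the average number of clicks to return to page 1, not an exact answer.

```python
import random


def surf(links, n, steps, p=0.85, start=1, seed=0):
    """Simulate a random surfer; return the click count of each return to `start`.

    Assumptions (not from the exercise): damping p = 0.85, and a page with no
    outgoing links sends the surfer to a uniformly random page.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    page, clicks, return_times = start, 0, []
    for _ in range(steps):
        targets = links.get(page, [])
        if targets and rng.random() < p:
            page = rng.choice(targets)   # follow a random outgoing link
        else:
            page = rng.randint(1, n)     # random jump (or dangling page)
        clicks += 1
        if page == start:                # completed one return to `start`
            return_times.append(clicks)
            clicks = 0
    return return_times


# Hypothetical graph: page -> pages it links to (page 5 has no links).
LINKS = {1: [2, 3], 2: [3], 3: [1], 4: [3, 5], 5: []}
times = surf(LINKS, 5, steps=200_000)
print("estimated mean clicks to return to page 1:", sum(times) / len(times))
```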

- a. Show that this Markov chain is irreducible.

- b. Suppose the surfer starts at page 1. How many mouse clicks on average must the surfer make to get back to page 1?
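Both parts can be checked numerically. For part (a), the term (1 - p)/n makes every entry of the Google matrix G positive whenever p < 1, so any page can reach any other page in one click. The sketch below confirms this with a breadth-first reachability check. It also shows that the link matrix alone (p = 1) need not be irreducible. For part (b), it relies on a standard result for irreducible chains: the expected number of steps to return to state i, starting from i, is 1/q_i, where q is the steady-state vector. Here q is found by power iteration.

Several details are assumptions, not taken from the exercise:

- the column-stochastic convention x_{k+1} = G x_k;
- the damping value p = 0.85;
- the handling of pages with no outgoing links;
- the hypothetical graph `LINKS`;
- the helper names.

```python
from collections import deque


def google_matrix(links, n, p=0.85):
    """Column-stochastic G = p*P_star + (1-p)*K, where K has every entry 1/n.

    Assumption: a page with no outgoing links is treated as linking to every page.
    """
    G = [[0.0] * n for _ in range(n)]
    for j in range(1, n + 1):
        targets = links.get(j, [])
        for i in range(1, n + 1):
            if targets:
                p_star = (1.0 / len(targets)) if i in targets else 0.0
            else:
                p_star = 1.0 / n
            G[i - 1][j - 1] = p * p_star + (1 - p) / n
    return G


def is_irreducible(P):
    """True if every state can reach every other (a move j -> i needs P[i][j] > 0)."""
    n = len(P)
    for start in range(n):
        seen, queue = {start}, deque([start])
        while queue:
            j = queue.popleft()
            for i in range(n):
                if P[i][j] > 0 and i not in seen:
                    seen.add(i)
                    queue.append(i)
        if len(seen) < n:
            return False
    return True


def steady_state(P, tol=1e-12, max_iter=10_000):
    """Power iteration x_{k+1} = P x_k from the uniform vector."""
    n = len(P)
    x = [1.0 / n] * n
    for _ in range(max_iter):
        y = [sum(P[i][j] * x[j] for j in range(n)) for i in range(n)]
        if max(abs(a - b) for a, b in zip(x, y)) < tol:
            return y
        x = y
    return x


LINKS = {1: [2, 3], 2: [3], 3: [1], 4: [3, 5], 5: []}  # hypothetical graph
G = google_matrix(LINKS, 5)
q = steady_state(G)
print("G irreducible:", is_irreducible(G))
print("links alone irreducible:", is_irreducible(google_matrix(LINKS, 5, p=1.0)))
print("mean clicks to return to page 1:", 1 / q[0])
```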

In Exercises 25 and 26, consider a set of webpages hyperlinked by the given directed graph. Find the Google matrix for each graph and compute the PageRank of each page in the set.

25.
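Computing the PageRank amounts to finding the steady-state vector q of the Google matrix G, that is, solving Gq = q with entries summing to 1. One approach is sketched below. It replaces one redundant equation of (G - I)q = 0 with the normalization q_1 + ... + q_n = 1 and solves the resulting system by Gauss-Jordan elimination. For an irreducible chain, this system has a unique solution. The sketch rests on several assumptions:

- the damping value p = 0.85;
- pages with no outgoing links are treated as linking to every page;
- the graph `LINKS` is hypothetical, since the exercise's graph is only in the image;
- the helper names are illustrative.

```python
def google_matrix(links, n, p=0.85):
    """Column-stochastic G = p*P_star + (1-p)*K, where K has every entry 1/n.

    Assumption: a page with no outgoing links is treated as linking to every page.
    """
    G = [[0.0] * n for _ in range(n)]
    for j in range(1, n + 1):
        targets = links.get(j, [])
        for i in range(1, n + 1):
            if targets:
                p_star = (1.0 / len(targets)) if i in targets else 0.0
            else:
                p_star = 1.0 / n
            G[i - 1][j - 1] = p * p_star + (1 - p) / n
    return G


def pagerank(G):
    """Solve (G - I) q = 0 with sum(q) = 1 by Gauss-Jordan elimination."""
    n = len(G)
    # Augmented rows of [G - I | 0]; the last row becomes the normalization.
    A = [[G[i][j] - (1.0 if i == j else 0.0) for j in range(n)] + [0.0]
         for i in range(n)]
    A[-1] = [1.0] * n + [1.0]
    for col in range(n):
        # Partial pivoting: bring the largest entry in this column up.
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


LINKS = {1: [2, 3], 2: [3], 3: [1], 4: [3, 5], 5: []}  # hypothetical graph
q = pagerank(google_matrix(LINKS, 5))
for page, rank in enumerate(q, start=1):
    print(f"page {page}: PageRank {rank:.4f}")
```

Solving the linear system gives the steady state exactly, up to rounding. Power iteration is the usual alternative for very large link graphs.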
