Bagging and Boosting

Question

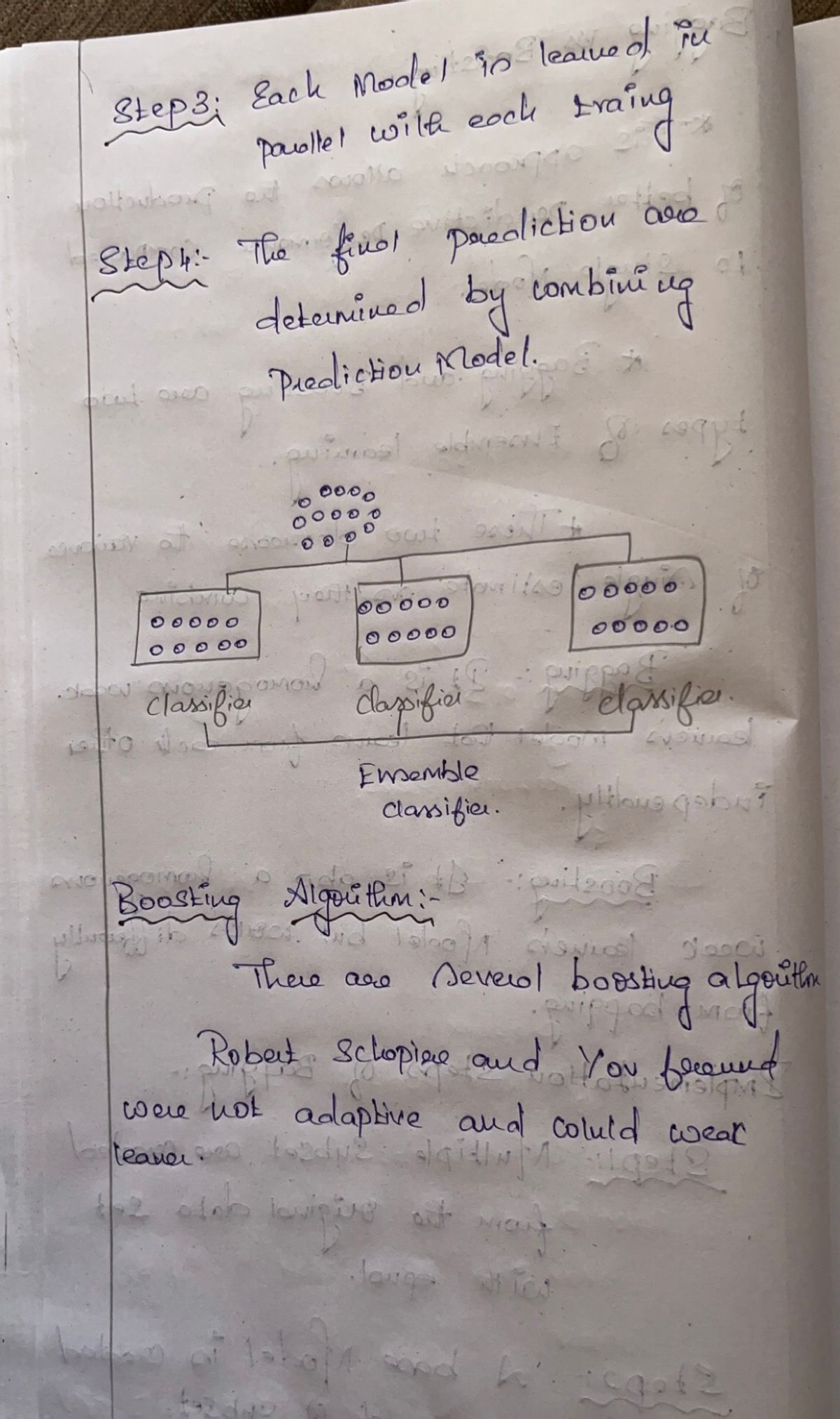

**Q6 (18 pts): Bagging and Boosting**

Bagging is generally used to stabilize classifiers with unstable learning algorithms (optimal score searching algorithms). A classifier has a stable learning algorithm if small changes to the training data do not change the class labels it predicts on the test data. For instance, the predictions of a decision tree might change significantly with a small change in the training data. This definition depends, of course, on the amount of data: classifiers that are unstable with \(10^3\) training examples may be stable with \(10^9\) examples. Bagging works by aggregating the answers of unstable classifiers trained on multiple training datasets. These datasets are often not independent; they are generally sampled with replacement from the same training data.
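As a rough illustration of the aggregation step described above, the following minimal sketch draws bootstrap samples (with replacement) from one training set, fits one base learner per sample, and combines their answers by majority vote. The 1-nearest-neighbour base learner, the toy data, and all function names are assumptions chosen for illustration; they are not part of the question.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) examples with replacement from the same training data."""
    return [rng.choice(data) for _ in data]

def train_1nn(sample):
    """Hypothetical unstable base learner: 1-nearest-neighbour on a 1-D feature."""
    def predict(x):
        nearest = min(sample, key=lambda ex: abs(ex[0] - x))
        return nearest[1]
    return predict

def bagged_predict(models, x):
    """Aggregate the base learners' answers by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Toy training set of (x, label) pairs, assumed for illustration only.
train = [(1.0, 0), (2.0, 0), (3.0, 0), (4.0, 0),
         (6.0, 1), (7.0, 1), (8.0, 1), (9.0, 1)]

# One base learner per bootstrap sample; the samples overlap, so they are not independent.
models = [train_1nn(bootstrap_sample(train, rng)) for _ in range(25)]

print(bagged_predict(models, 0.5))
print(bagged_predict(models, 9.5))
```

Each base learner sees a slightly different dataset, so individual predictions near the class boundary may disagree; the vote smooths out that variation.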

Boosting works by converting weak classifiers (very simple models) into strong ones (models that can describe complex relationships between the inputs and the class labels). A weak learner is a classifier whose output on a test example with attributes \(x_i\) is only slightly correlated with its true class \(t_i\). That is, the weak learner classifies the data better than random, but not much better. In boosting, weak learners are trained sequentially so that the current learner gives more emphasis to the examples that past learners made mistakes on.
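The sequential reweighting described above can be sketched with an AdaBoost-style procedure. AdaBoost is one specific boosting algorithm, picked here as an assumption; the question itself does not name one. The weak learner is a one-threshold decision stump, labels are coded as +1 and -1, and the toy data and function names are hypothetical.

```python
import math

def stump_output(x, thr, sign):
    """Decision stump: predicts `sign` when x >= thr, otherwise -sign."""
    return sign if x >= thr else -sign

def best_stump(xs, ys, w):
    """Weak learner: the stump with the lowest weighted training error."""
    best = None
    for thr in sorted(set(xs)):
        for sign in (1, -1):
            err = sum(wi for wi, x, y in zip(w, xs, ys)
                      if stump_output(x, thr, sign) != y)
            if best is None or err < best[0]:
                best = (err, thr, sign)
    return best

def adaboost(xs, ys, rounds):
    """Train stumps sequentially, upweighting examples that earlier stumps got wrong."""
    n = len(xs)
    w = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        err, thr, sign = best_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)    # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # vote strength of this stump
        ensemble.append((alpha, thr, sign))
        # Misclassified examples (y * output = -1) have their weight multiplied by exp(alpha).
        w = [wi * math.exp(-alpha * y * stump_output(x, thr, sign))
             for wi, x, y in zip(w, xs, ys)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, x):
    """Strong classifier: sign of the alpha-weighted sum of stump outputs."""
    score = sum(alpha * stump_output(x, thr, sign) for alpha, thr, sign in ensemble)
    return 1 if score >= 0 else -1

# Toy 1-D data that no single stump can fit (assumed for illustration).
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

ensemble = adaboost(xs, ys, rounds=3)
print([predict(ensemble, x) for x in xs])
```

On this toy set the best single stump still misclassifies three of the ten training points, while the weighted vote of three stumps fits all ten, which is the weak-to-strong conversion the paragraph describes.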

1. **(8 pts)** Suppose we decide to use a large deep feedforward network as a classifier with a small training dataset. Assume the network can perfectly fit the training data, but we want to make sure it is accurate on our test data (without having access to the test data). Would you use boosting or bagging to help improve the classification accuracy? Describe what the problem would be with using the other approach.
